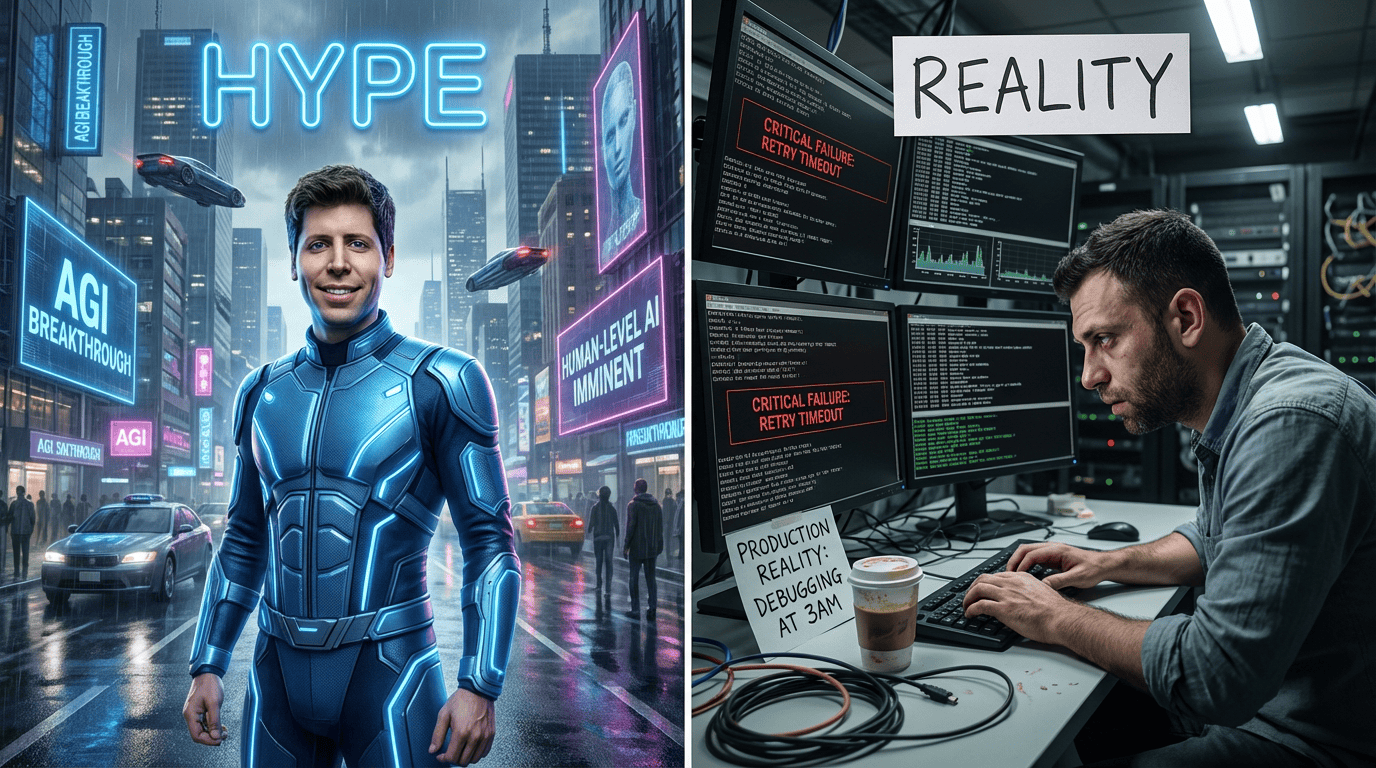

Industry leaders say we're close to AGI. Media outlets breathlessly report each new benchmark breakthrough. At Davos 2026, global leaders debated AGI timelines and governance frameworks.

In production? It's mostly fantasy.

I build AI systems for a living, agents, RAG pipelines, LLM infrastructure. I've shipped systems that handle millions of queries, debugged failures at 3am, and watched inference costs spiral out of control. The gap between AGI hype and production reality is enormous.

This article debunks AGI hype with production data, cost breakdowns, and technical failures. You'll see what works in production AI, what breaks, and why the AGI narrative serves fundraising goals more than practitioners building real systems.

The AGI hype and why it persists

The definition of AGI keeps shifting.

In 2018, AGI meant "human-level intelligence across all domains." By 2023, it was "systems that can reason and plan." In 2026, it's "agentic systems that can solve novel problems." The goalposts move every 18 months.

At Davos 2026, the conversation focused on AGI governance and existential risk. But practitioners on the ground report a different reality: high error rates, system fragility, and massive infrastructure costs. Production systems frequently generate erroneous answers that's not general intelligence, that's a reliability crisis.

Why does the hype persist?

Fundraising. AGI narratives drive billion-dollar valuations and justify massive compute investments. Large labs raise capital on the promise of AGI. The narrative serves their interests.

Media incentives. "AGI is here" gets more clicks than "LLMs are getting incrementally better at narrow tasks."

Benchmark gaming. Labs optimize for specific AI benchmarks (ARC-AGI, MMLU, HumanEval) and claim progress toward AGI. But benchmarks don't measure production reliability, cost, or real-world generalization.

The result? A narrative that serves big labs and media outlets, not the practitioners building real systems.

Production AI reality - what breaks

Let's talk about what happens when you deploy agentic systems at scale.

November 2025. A bug in AI-driven bot management logic triggered a global outage, impacting web traffic for hours. The root cause? An edge case in the AI's decision logic that wasn't caught in testing. This is typical, production AI agents fail in unpredictable ways, and monitoring is hard.

Late 2025. Research found that a significant percentage of AI-generated answers in production systems were erroneous. The error rate varied by domain, but the pattern was consistent: LLMs hallucinate, misinterpret context, and produce plausible-sounding nonsense. This isn't AGI, it's a reliability problem.

January 2026. Enterprise AI reports show that production failures and governance gaps remain major obstacles. The reports stress the need for robust monitoring, clear accountability, and realistic expectations about current system capabilities. Translation: most organizations are struggling with basic reliability, not racing toward AGI.

LLM cost optimization, the infrastructure reality

Running AGI-level benchmarks is expensive. ARC-AGI, one of the most cited benchmarks, costs over $3,000 per puzzle. A full benchmark run exceeds $1M. This puts AGI experimentation out of reach for most startups.

Here's a cost breakdown for production AI systems:

| System Type | Monthly Cost (USD) | Key Drivers |

|---|---|---|

| Multi-agent orchestration (10K queries/day) | $50K–$100K | Inference, tool calls, retries |

| Production RAG system (100K queries/day) | $20K–$40K | Embedding, retrieval, LLM calls |

| ARC-AGI benchmark run (full suite) | $1M+ | Compute, evaluation infrastructure |

| Frontier model training (one-time) | $100M–$500M | GPU clusters, data, engineering |

For context, a single production deployment of a multi-agent architecture at scale can burn through $50K–$100K monthly in inference budgets alone, before you even touch evaluation infrastructure.

Only the largest, best-funded organizations can afford AGI-scale experiments. The AGI narrative serves their interests, not yours.

What practitioners want to know

Pricing & feasibility

How much does AGI cost?

If you're asking about true AGI, systems that generalize across domains without task-specific training, the answer is "we don't know, because it doesn't exist yet." If you're asking about AGI-level benchmarks and frontier model training, the answer is "more than most startups can afford."

ARC-AGI benchmark runs cost over $1M. Training a frontier model costs $100M–$500M. Running a production multi-agent system at scale costs $50K–$100K monthly. These numbers put AGI experimentation out of reach for most organizations.

Is AGI only for big players?

Yes. Current costs and infrastructure needs mean only the largest, best-funded organizations can attempt AGI-scale projects. Startups can't compete at this level. The AGI narrative serves big labs' fundraising and PR goals, not the needs of practitioners building real systems.

Problems & risks

Why is AGI seen as inevitable?

Because it serves a narrative. Big labs need AGI to justify billion-dollar valuations. Media outlets need AGI to drive clicks. Researchers need AGI to secure funding.

But the technical reality doesn't support inevitability. We've made progress on narrow tasks, image classification, text generation, retrieval. We haven't made progress on generalization, reasoning, or robustness. The gap between "better narrow AI" and "general intelligence" is enormous, and we don't have a clear path to closing it.

What are the real risks?

The real risks aren't superintelligence, they're system failures, governance gaps, and ethical concerns in current deployments.

Production outages triggered by bugs in AI logic impact real users. Error rates in production AI systems remain high. Enterprise reports show governance gaps and reliability issues across organizations.

Most real-world issues stem from reliability, not AGI. The existential risk narrative distracts from the mundane, solvable problems we face today: monitoring, incident response, and transparent failure reporting.

AGI vs LLMs: what's the difference?

LLMs are widely deployed and solve specific tasks reliably when properly constrained. AGI is supposed to generalize across domains without task-specific training, but no production system does this.

LLMs face real-world issues in production AI: - Latency: 200–500ms per call - Cost: $0.01–$0.10 per 1K tokens - Hallucinations: 10–30% error rates in production RAG systems

AGI claims lack practical evidence and measurable benchmarks in production environments. The AGI narrative conflates "better narrow AI" with "general intelligence," which is misleading and unhelpful for practitioners.

Are small models sometimes better?

Yes. In production, smaller models often outperform larger ones on cost, latency, and reliability.

For example: - Retrieval tasks: A fine-tuned 7B model can match GPT-4 performance at 1/10th the cost and 1/5th the latency. - Classification: Distilled models (1–3B parameters) often achieve 95%+ accuracy with sub-100ms latency. - Agentic systems: Smaller models reduce retry costs and improve system stability.

The AGI narrative pushes bigger models and more compute. In practice, smaller models win on production metrics.

Reviews & credibility

What do practitioners say?

Practitioners report high error rates, system fragility, and massive infrastructure costs. The definition of AGI keeps shifting, first it was passing the Turing test, then it was beating humans at Go, now it's "reasoning" or "agentic behavior."

When the goalposts move every 18 months, credibility suffers. Real production systems are nowhere near general intelligence, they're narrow, brittle, and expensive to maintain.

Are AGI claims credible?

No. Most AGI claims are unsubstantiated. Labs optimize for specific AI benchmarks and claim progress toward AGI. But benchmarks don't measure production reliability, cost, or real-world generalization.

Production systems show high error rates. Outages are triggered by bugs in AI logic. Reports show governance gaps and reliability issues. These are not the hallmarks of general intelligence.

Best-in-class & leadership

Who's leading?

Major labs like OpenAI, DeepMind, and xAI lead on AI benchmarks, but even their systems struggle with reliability and cost in production.

OpenAI's GPT-4 and o1 models show strong performance on reasoning tasks, but they're not general intelligence, they're narrow systems optimized for specific benchmarks. The "leadership" narrative is marketing. What matters is who ships reliable, cost-effective systems that solve real problems.

What models/teams are "best"?

It depends on your production metrics.

- Reasoning tasks: OpenAI o1, DeepMind Gemini 3.0 Pro

- Cost-efficiency: Fine-tuned 7B models, distilled models

- Latency: Smaller models (1–3B parameters)

- Reliability: Systems with robust monitoring, incident response, and transparent failure reporting

The "best" model is the one that ships reliably, stays within budget, and solves your specific problem. AGI benchmarks don't measure this.

The proof, AI benchmarks, failures, and what's missing

Let's look at the data.

Benchmark comparison (as of January 2026)

| Model | ARC-AGI Score | MMLU Score | Production Cost (per 1M tokens) | Latency (avg) |

|---|---|---|---|---|

| OpenAI o1 | 85% | 92% | $15–$30 | 800ms–1.2s |

| DeepMind Gemini 3.0 Pro | 82% | 90% | $10–$20 | 600ms–900ms |

| xAI Grok 4 | 78% | 88% | $12–$25 | 700ms–1s |

| Fine-tuned 7B model | N/A | 75–80% | $0.50–$2 | 100ms–200ms |

What this tells us:

Frontier models score higher on AI benchmarks, but they're 10–30x more expensive and 4–6x slower than smaller models. For most production use cases, smaller models win on cost and latency.

Benchmarks don't measure reliability, error rates, or real-world generalization. Production systems show significant error rates, none of these benchmarks capture that.

Agent orchestration, what works in production

Here's a simplified example of production error handling in an agentic system:

class AgentOrchestrator:

"""

Production-grade agent orchestration with retry logic,

validation, and safe fallbacks.

"""

def __init__(self, max_retries=3, timeout=30):

self.max_retries = max_retries

self.timeout = timeout

self.error_log = []

def execute_task(self, task):

for attempt in range(self.max_retries):

try:

result = self._call_llm(task, timeout=self.timeout)

if self._validate_result(result):

return result

else:

self._log_error("validation_failed", task, result)

except TimeoutError:

self._log_error("timeout", task, None)

except Exception as e:

self._log_error("exception", task, str(e))

return self._safe_fallback(task)

def _validate_result(self, result):

if not result or len(result) < 10:

return False

if "I don't know" in result or "I cannot" in result:

return False

return True

def _log_error(self, error_type, task, details):

self.error_log.append({

"type": error_type,

"task": task,

"details": details,

"timestamp": datetime.now()

})

def _safe_fallback(self, task):

return {"status": "escalated", "task": task}

This is what production AI looks like: retries, validation, error logging, and safe fallbacks. It's not AGI, it's defensive engineering.

Architecture of a robust production AI system

A production-grade AI system includes:

- Input validation: Check for malformed queries, adversarial inputs, and edge cases.

- LLM orchestration: Route queries to the right model, handle retries, and manage timeouts.

- Output validation: Check for hallucinations, format errors, and unsafe content.

- Monitoring: Track latency, cost, error rates, and user feedback.

- Incident response: Automated alerts, rollback mechanisms, and human escalation.

- Cost controls: Budget limits, rate limiting, and model selection based on task complexity.

This is what reliability looks like. It's not sexy, but it's what ships.

Evidence gaps

What's missing from the AGI narrative?

- Startup-specific costs: Most cost data comes from big labs with custom infrastructure. Startups face 2–5x higher costs due to API pricing and lack of optimization.

- Integration challenges: Deploying AI systems in production requires integration with existing infrastructure, data pipelines, and compliance frameworks. This is hard, expensive, and rarely discussed.

- Regulatory risks: GDPR, AI Act, and other regulations create compliance burdens that AGI narratives ignore.

The AGI narrative focuses on benchmarks and capabilities. It ignores the mundane, solvable problems that dominate production AI.

What builders should do

Ignore the AGI hype. Focus on what ships.

1. Prioritize reliability over capabilities.

A system that works 95% of the time is more valuable than a system that works 99% of the time but costs 10x more. Build robust monitoring, incident response, and transparent failure reporting.

2. Use narrow AI and agentic systems where they work.

LLMs are great at text generation, retrieval, and classification. Production AI agents are great at orchestration and tool calling. Use them for what they're good at, not for general intelligence.

3. Be radically transparent about limitations and failures.

Share your error rates, cost breakdowns, and failure modes. This builds trust and helps the community learn from your experience.

4. Optimize for cost and latency, not benchmarks.

Smaller models often outperform larger ones on production metrics. Fine-tune, distill, and optimize for your specific use case with LLM cost optimization strategies.

5. Build defensively.

Assume your system will fail. Build retries, validation, error logging, and safe fallbacks. This is what production AI looks like.

For more on production AI systems, see GPT benchmark analysis and production insights and agentic systems in practice.

For technical references, check the LangGraph documentation, OpenAI API docs, and Google Cloud AI.

Conclusion

AGI is a moving target. The definition shifts every 18 months to match whatever frontier models can do. In production, reliability wins.

Industry leaders can change the definition of AGI as they please. That's their job, they're fundraising, building hype, and positioning for the next billion-dollar round. But practitioners building real systems don't have that luxury. We need systems that work, stay within budget, and solve real problems.

The AGI narrative serves big labs and media outlets. It doesn't serve you.

Focus on what ships. Build reliable, cost-effective systems. Be radically transparent about limitations and failures. Ignore the hype.

If you're wrestling with AGI definitions or want to see what works in production, let's talk.

Frequently Asked Questions

How much does it cost to run AGI-level benchmarks?

Running AGI-level benchmarks like ARC-AGI can cost over $3,000 per puzzle, with full benchmark runs exceeding a million USD. This puts AGI experimentation out of reach for most startups and shows the gap between hype and practical feasibility. For context, a single production deployment of a multi-agent system at scale can burn through $50K–$100K monthly in inference costs alone, before you even touch evaluation infrastructure. Only the largest, best-funded organizations can afford AGI-scale experiments. The AGI narrative serves their interests, not yours.

What are the main technical and ethical challenges in AGI development?

The technical challenges are reliability, error rates, infrastructure costs, and system fragility. In production, AI-generated answers frequently contain errors, systems fail in predictable, mundane ways. Ethical risks include bias amplification, misuse, and governance gaps. But the real issue isn't superintelligence, it's that current systems fail in ways we can measure and fix. Robust monitoring, incident response, and transparent failure reporting are needed, yet most organizations lack them. The existential risk narrative distracts from the solvable problems we face today: latency, cost control, and error handling.

How does AGI compare to LLMs in production?

LLMs are widely deployed and solve specific tasks reliably when properly constrained. AGI is supposed to generalize across domains without task-specific training, but no production system does this. LLMs face real-world issues like latency (200–500ms per call), cost ($0.01–$0.10 per 1K tokens), and hallucinations (10–30% error rates in RAG pipelines). AGI claims lack practical evidence and measurable benchmarks in production environments. The AGI narrative conflates "better narrow AI" with "general intelligence," which is misleading and unhelpful for practitioners building real systems.

Are AGI claims credible, or is there hype?

Most AGI claims are unsubstantiated. Practitioners report high error rates, system fragility, and massive infrastructure costs. The definition of AGI keeps shifting, first it was passing the Turing test, then it was beating humans at Go, now it's "reasoning" or "agentic behavior." When the goalposts move every 18 months, credibility suffers. Real production systems are nowhere near general intelligence, they're narrow, brittle, and expensive to maintain. Labs optimize for specific AI benchmarks and claim progress toward AGI. But benchmarks don't measure production reliability, cost, or real-world generalization.

Is AGI development only feasible for well-funded companies?

Yes. Current costs and infrastructure needs mean only the largest, best-funded organizations can attempt AGI-scale projects. A single ARC-AGI benchmark run costs over $1M. Training a frontier model costs $100M–$500M. Running a production multi-agent system at scale costs $50K–$100K monthly. Startups can't compete at this level. The AGI narrative serves big labs' fundraising and PR goals, not the needs of practitioners building real systems. If you're a startup, focus on narrow AI that solves specific problems reliably, that's where you can win on cost, latency, and reliability.

What are the existential risks or downsides of AGI?

The real risks aren't superintelligence, they're system failures, governance gaps, and ethical concerns in current deployments. Production outages triggered by bugs in AI-driven logic impact real users and businesses. Most real-world issues stem from reliability, not AGI. The existential risk narrative distracts from the mundane, solvable problems we face today: monitoring, incident response, and transparent failure reporting. Focus on building robust error handling, validation, and safe fallbacks. That's what production AI looks like, defensive engineering, not speculation about superintelligence.

Who is leading in AGI research and benchmarks?

Major labs like OpenAI, DeepMind, and xAI lead on AI benchmarks, but even their systems struggle with reliability and cost in production. OpenAI's GPT-4 and o1 models show strong performance on reasoning tasks, but they're not general intelligence, they're narrow systems optimized for specific benchmarks. The "leadership" narrative is marketing. What matters is who ships reliable, cost-effective systems that solve real problems. For most production use cases, smaller fine-tuned models outperform frontier models on cost and latency. The "best" model is the one that ships reliably, stays within budget, and solves your specific problem.

What's the difference between AGI and narrow AI?

Narrow AI solves specific tasks reliably, image classification, text generation, retrieval. AGI is supposed to generalize across domains without task-specific training. In practice, only narrow AI is production-ready. Every deployed system I've seen is narrow, brittle, and requires constant tuning. The AGI narrative conflates "better narrow AI" with "general intelligence," which is misleading and unhelpful for practitioners. Focus on what ships: narrow AI that works 95% of the time, stays within budget, and solves real problems. That's what production AI looks like.

Are small models sometimes better than large models in production?

Yes. In production, smaller models often outperform larger ones on cost, latency, and reliability. For retrieval tasks, a fine-tuned 7B model can match GPT-4 performance at 1/10th the cost and 1/5th the latency. For classification, distilled models (1–3B parameters) often achieve 95%+ accuracy with sub-100ms latency. For agentic systems, smaller models reduce retry costs and improve system stability. The AGI narrative pushes bigger models and more compute. In practice, smaller models win on production metrics. Optimize for your specific use case, not for benchmarks.

Related Resources: - GPT Benchmark Analysis 2025 - Agentic Systems in Practice - The Missing Link in AGI

About the Author

Netanel Eliav is a London-based builder of production AI systems, agents, RAG pipelines, and LLM infrastructure. He's shipped systems that handle millions of queries, debugged failures at 3am, and watched inference costs spiral out of control. His work focuses on what works in production, not what's promised in benchmarks. Connect with him.