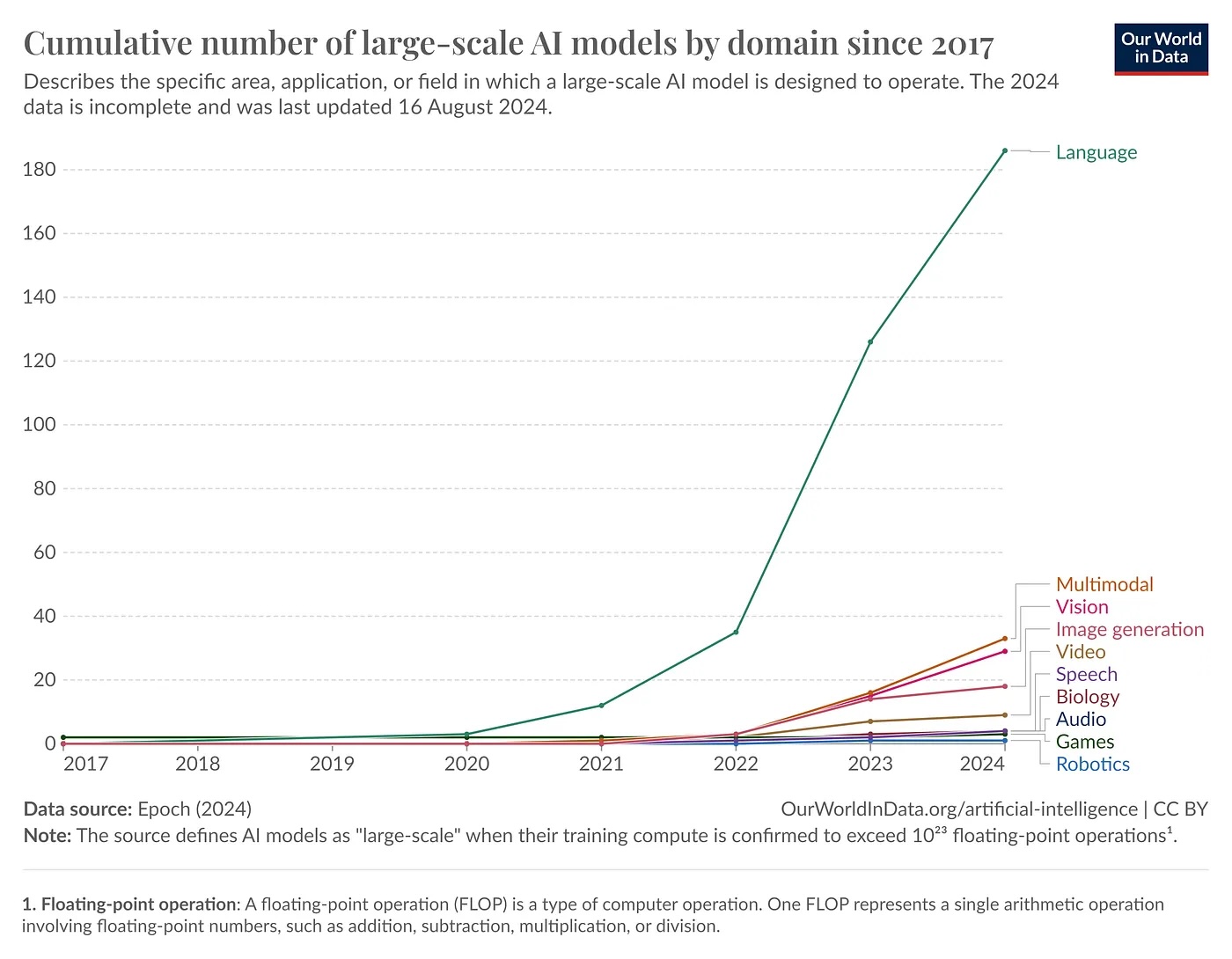

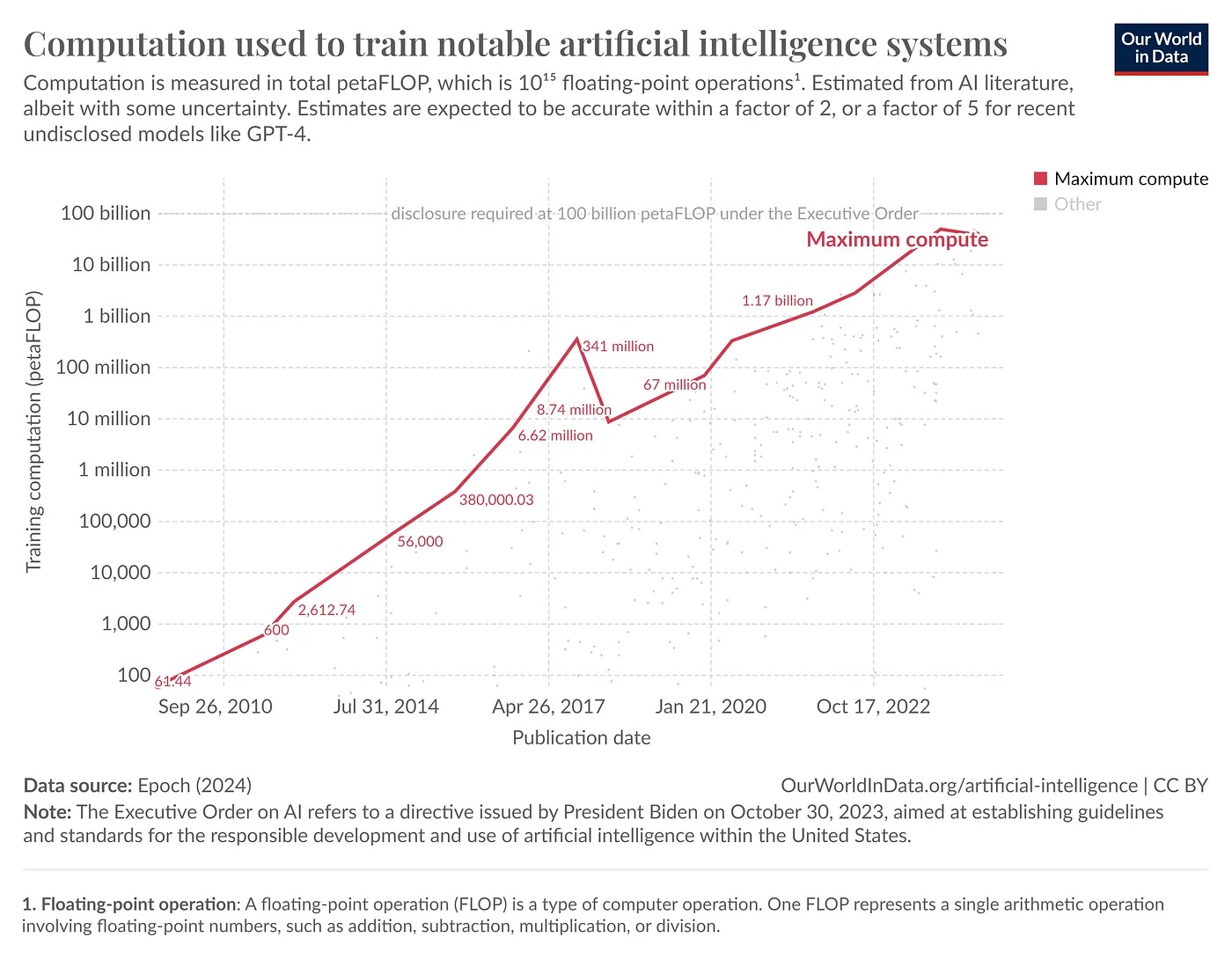

Let’s talk about the elephant in the room: the AI race. It’s moving at breakneck speed, with new models popping up faster than I can keep track of. But here’s the million-dollar question:

Can this pace actually continue?

I’m not convinced.

The Open-Source Revolution

Take Meta (formerly Facebook), for instance. They’ve just dropped Llama 3.1, a beast of a model, and get this: the weights are free to download. Yep, you heard that right. Meanwhile, the likes of OpenAI and Google are scrambling to keep up, pouring more and more cash into their next big thing. But at what cost? Zuckerberg’s move to open-source Llama 3.1 is pretty clever, if you ask me. He’s raised the bar, and now everyone else has to jump higher. It’s like he’s democratizing AI right under our noses.

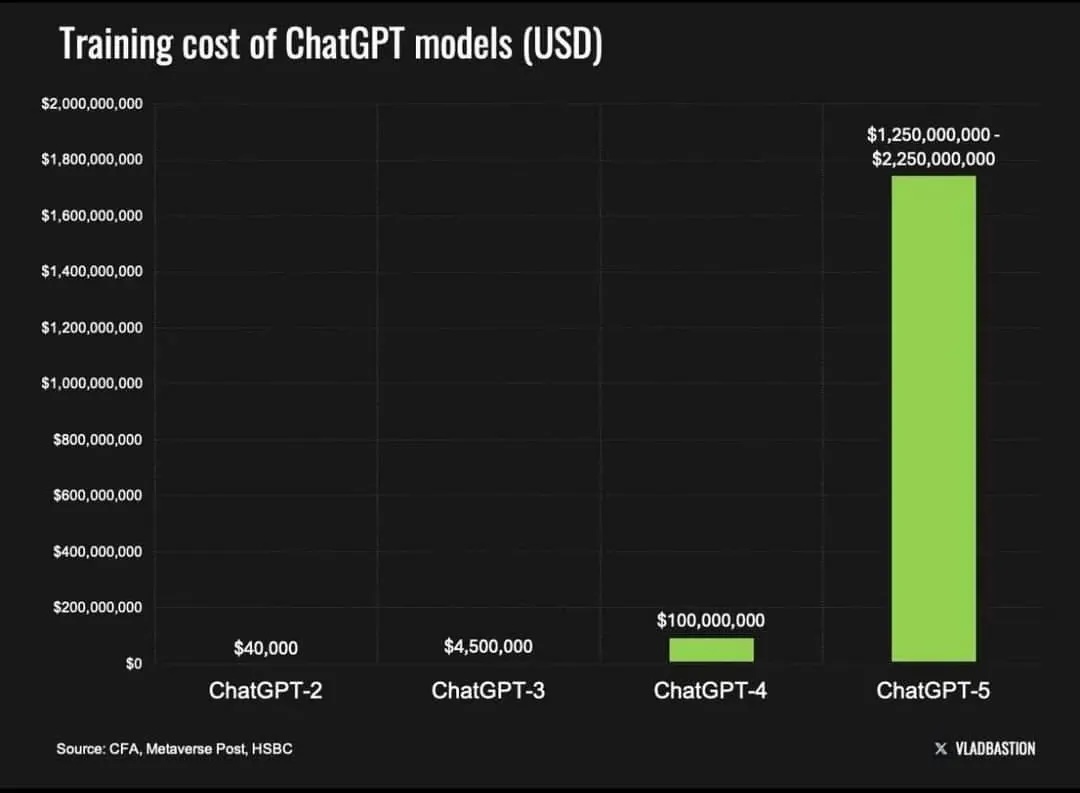

The Cost of Ambition

Rumor has it:

- GPT-5 could cost OpenAI around $40M to train

- GPT-6 could soar beyond $400M

It’s starting to look like a high-stakes poker game where the ante jumps tenfold every round.

But while Big Tech throws millions into massive models, students and independent researchers are quietly fine-tuning smaller ones for niche tasks — and getting shockingly good results.

Platforms like Hugging Face now host community-built models that challenge GPT-4 in specific domains. It’s David vs. Goliath, and let’s just say… David is getting bolder.

The Great AI Spend

Goldman Sachs projects that global AI spending could reach a mind-blowing $1 trillion in the coming years.

But will that investment actually pay off?

Even MIT’s Daron Acemoglu suggests that we’re still far from achieving truly groundbreaking productivity improvements.

The Price Paradox

Here’s where things get interesting.

While model development costs are skyrocketing, the cost of using AI is plummeting.

OpenAI recently dropped GPT-4 pricing to $4 per million tokens — a stunning 79% decrease year over year.

Why the price freefall?

- Open-source competition (Llama 3.1)

- Hardware breakthroughs (Groq, SambaNova)

- Chip wars between NVIDIA, AMD, Intel, Qualcomm

- Increasing efficiency and specialization

We’re witnessing a strange paradox:

AI has never been more expensive to build — and never cheaper to use.

Implications for AI Developers

So what does all this mean for builders?

First:

Don’t obsess over costs right now.

Your app might not be viable today, but the economics are changing so quickly that it could be viable tomorrow.

Second:

Even high-call workloads are becoming affordable as prices decline.
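To put rough numbers on those two points, here’s a back-of-the-envelope sketch in Python. It takes the $4 per million tokens and the 79% yearly drop quoted above and assumes, purely for illustration, that the same decline keeps compounding. The workload size and the budget are made-up numbers, not data from a real app.

```python
import math

PRICE_PER_MILLION_TOKENS = 4.00   # USD, figure quoted in this post
ANNUAL_DECLINE = 0.79             # 79% year-over-year drop, quoted in this post


def projected_price(years_from_now: float,
                    price_now: float = PRICE_PER_MILLION_TOKENS,
                    annual_decline: float = ANNUAL_DECLINE) -> float:
    """Price per million tokens after `years_from_now`, assuming the same
    yearly decline keeps compounding (an assumption, not a forecast)."""
    return price_now * (1.0 - annual_decline) ** years_from_now


def monthly_cost(tokens_per_month: float, price_per_million: float) -> float:
    """Monthly bill in USD for a given token volume."""
    return tokens_per_month / 1_000_000 * price_per_million


def years_until_affordable(tokens_per_month: float, monthly_budget: float,
                           price_now: float = PRICE_PER_MILLION_TOKENS,
                           annual_decline: float = ANNUAL_DECLINE) -> float:
    """Years until the monthly bill fits the budget (0.0 if it already does)."""
    cost_now = monthly_cost(tokens_per_month, price_now)
    if cost_now <= monthly_budget:
        return 0.0
    # Solve cost_now * (1 - decline)^t = budget for t.
    return math.log(monthly_budget / cost_now) / math.log(1.0 - annual_decline)


if __name__ == "__main__":
    tokens = 5_000_000_000   # hypothetical: 5 billion tokens per month
    budget = 5_000.0         # hypothetical: USD 5,000 per month
    for year in range(3):
        price = projected_price(year)
        print(f"year {year}: ${price:.2f}/M tokens, "
              f"${monthly_cost(tokens, price):,.0f}/month")
    wait = years_until_affordable(tokens, budget)
    print(f"fits the budget in about {wait:.1f} years")
```

With these made-up inputs, a workload that costs $20,000 a month today would slip under a $5,000 budget in less than a year, if (and it’s a big if) the trend holds. Swap in your own volume and budget to see how sensitive the answer is.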

Focus on:

- Building something genuinely useful

- Staying adaptable as new models drop

- Switching models when cost-performance trends shift

Innovation matters more than optimization at this stage.
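One way to stay adaptable is to treat the model as a swappable setting rather than a hard dependency. Here’s a minimal sketch of that idea in Python: keep a small catalog of candidates and pick the cheapest one that clears your quality bar. The model names, prices, and quality scores below are all hypothetical placeholders; in practice the scores would come from your own evaluation set.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical label, not a real model id
    price_per_million: float   # USD per million tokens (made-up numbers)
    quality: float             # score from your own eval set, 0.0 to 1.0


def pick_model(options: Iterable[ModelOption],
               min_quality: float) -> Optional[ModelOption]:
    """Return the cheapest option that clears the quality bar, or None."""
    good_enough = [m for m in options if m.quality >= min_quality]
    if not good_enough:
        return None
    return min(good_enough, key=lambda m: m.price_per_million)


if __name__ == "__main__":
    catalog = [
        ModelOption("big-hosted-model", 4.00, 0.92),
        ModelOption("open-weights-model", 0.90, 0.88),
        ModelOption("small-finetuned-model", 0.20, 0.81),
    ]
    choice = pick_model(catalog, min_quality=0.85)
    print(choice.name if choice else "no model meets the bar")
```

When prices or scores change, you update the catalog and rerun the selection instead of rewriting the app.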

The Bottom Line

The AI war is chaotic, unpredictable, and moving at lightning speed. It could end in a spectacular crash for some major players. Or it could ignite a wave of innovation unlike anything we’ve seen.

Open-source is reshaping the battlefield.

Falling prices are opening new doors.

And the real race is shifting from who can build the biggest model to who can make it matter.

What’s Next? A War

Here’s the real question:

Are we witnessing an AI revolution — or just another evolution?

Think about the rise of cloud platforms like AWS, Azure, and GCP. They were hyped as world-changing, and while they did transform tech infrastructure, they ultimately settled into a critical but not earth-shattering role.

AI might follow a similar trajectory.

Instead of detonating the world as we know it, perhaps AI will become another powerful tool in the developer’s arsenal — indispensable, yet not apocalyptic.

Final Thoughts

So what do you think?

Is AI destined to reshape everything?

Or will it become just another essential layer in the tech stack?